What are the detailed principles of the Experience Map in RatSLAM: Components, Transitions, Creation, and Maintenance?

This excerpt note covers the components, transitions, creation, and maintenance of the experience map, from Milford 2008.

Michael Milford. Robot Navigation from Nature: Simultaneous Localisation, Mapping, and Path Planning Based on Hippocampal Models. Springer-Verlag Berlin Heidelberg Press, pp. 129-143, 149-150, 2008.

I. A Map Made of Experiences

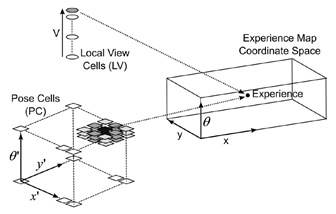

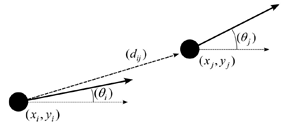

Activity in the pose cells and local view cells drives the creation of experiences. Each experience represents a snapshot of the activity within the pose cells and local view cells at a certain time. When the set of existing experiences is insufficient for describing the pose and local view cells’ activity state, a new experience is created. Fig. 1 shows an experience and how it is associated with certain pose and local view cells. $x'_i$, $y'_i$, and $\theta'_i$ describe the location of the cells within the pose cell matrix associated with the experience, and $V_i$ describes the local view cell associated with the experience.

Fig. 1 Experience map co-ordinate space. An experience is associated with certain pose and local view cells, but exists within the experience map’s own $(x, y, \theta)$ co-ordinate space.

Each experience also has its own $(x, y, \theta)$ state, which describes its location within the co-ordinate space of the experience map. This co-ordinate space is completely separate from the pose and local view cell co-ordinate spaces. The first experience learned is initialised with an arbitrary $(x, y, \theta)$ position within the experience map. Subsequent experiences are assigned a position based on the last experience’s position and the robot movement that has occurred since.

Experiences have an activation level that depends on how close the energy peaks in the pose and local view networks are to the cells associated with each experience. Each experience has a zone of association within the pose cells and local view cells. When the energy peaks in each network are within these zones, the experience is activated. Within the pose cells the zones are continuous, whereas within the local view cells the zone is discrete.

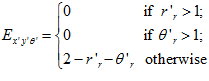

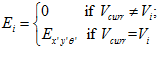

The component of an experience’s energy level determined by the current pose cell activity, $E_{x'y'\theta'}$, is calculated by:

$$E_{x'y'\theta'} = 1 - \frac{\sqrt{(x'_{pc} - x'_i)^2 + (y'_{pc} - y'_i)^2}}{r_a} - \frac{|\theta'_{pc} - \theta'_i|}{\theta_a}$$

Where $x'_{pc}$, $y'_{pc}$, and $\theta'_{pc}$ are the co-ordinates of the maximally active pose cell, $x'_i$, $y'_i$, and $\theta'_i$ are the co-ordinates of the pose cells associated with the experience, $r_a$ is the zone constant for the $(x', y')$ plane, and $\theta_a$ is the zone constant for the $\theta'$ dimension.

The visual scene $V$ acts like a switch for the experience, turning it on or off. The total energy level of the $i$th experience, $E_i$, is given by:

$$E_i = \begin{cases} E_{x'y'\theta'} & \text{if } V_{curr} = V_i \\ 0 & \text{if } V_{curr} \neq V_i \end{cases}$$

Where $V_{curr}$ is the current visual scene, and $V_i$ is the visual scene associated with experience $i$.

RatSLAM continuously monitors the energy levels of the experiences. If no experience has a positive energy level, no existing experience sufficiently represents the current pose and local view cell activity. In such a situation a new experience is generated to represent the current activity state of the pose and local view cells.
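The energy test and the creation rule can be sketched in Python as follows. This is a minimal illustration, not the author's implementation: it assumes the pose cell component falls off linearly with distance from the experience's cells, it ignores wrap-around of the pose cell matrix, and the names `Experience`, `update_experiences` and the default zone constants are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Experience:
    # Pose cell co-ordinates (x', y', theta') and local view id tied to this experience.
    x_cell: float
    y_cell: float
    th_cell: float
    view_id: int


def pose_energy(exp, peak, r_a, theta_a):
    """Pose cell component: 1 at the experience's cells, falling linearly with distance."""
    px, py, pth = peak
    planar = math.hypot(px - exp.x_cell, py - exp.y_cell)
    angular = abs(pth - exp.th_cell)  # assumption: no wrap-around handling
    return 1.0 - planar / r_a - angular / theta_a


def total_energy(exp, peak, view_id, r_a, theta_a):
    """The local view acts as a switch: a mismatched view zeroes the energy."""
    if view_id != exp.view_id:
        return 0.0
    return pose_energy(exp, peak, r_a, theta_a)


def update_experiences(experiences, peak, view_id, r_a=3.0, theta_a=6.0):
    """Return the index of the most energetic experience.

    If no experience has positive energy, a new one is created from the
    current pose cell peak and local view. r_a and theta_a are illustrative
    zone constants, not values from the source.
    """
    energies = [total_energy(e, peak, view_id, r_a, theta_a) for e in experiences]
    if not energies or max(energies) <= 0.0:
        experiences.append(Experience(peak[0], peak[1], peak[2], view_id))
        return len(experiences) - 1
    return energies.index(max(energies))
```

Calling `update_experiences` once per time step with the current pose cell peak and local view id either returns an existing experience or grows the list by one.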

II. Linking Experiences: Spatially, Temporally, Behaviourally

As the robot moves around the environment, the experience mapping algorithm also learns experience transitions. Transitions store information about the physical movement of the robot between one experience and another, as well as the movement behaviour used during the transition and the time duration of the transition. This information is used to perform experience map correction and is also used by the goal recall process.

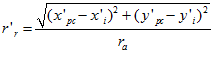

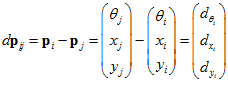

Fig. 2 shows a transition from experience $i$ to experience $j$. The robot’s change in pose state between experiences, $d\mathbf{p}_{ij}$, is calculated by comparing the odometric pose of the robot before and after the transition:

$$d\mathbf{p}_{ij} = \mathbf{p}^{odo}_j - \mathbf{p}^{odo}_i$$

Where $\mathbf{p}^{odo}_i$ and $\mathbf{p}^{odo}_j$ are vectors describing the pose of the robot according to odometry before and after the transition. This equation is only applicable when experience $j$ is first created, with its $(x, y, \theta)$ pose determined by the robot pose associated with experience $i$ and the robot’s movement during the transition.

Fig. 2 A transition between two experiences. Shaded circles and bold arrows show the actual pose of the experiences. $d\mathbf{p}_{ij}$ is the odometric distance between the two experiences.

As the robot navigates familiar parts of the environment, old experience transitions may be repeated, but with different odometric information about the transition. The new information about the transition, $d\mathbf{p}_{ij}^{new}$, is incorporated into the stored transition $d\mathbf{p}_{ij}$ through an averaging process.
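The text describes the update only as an averaging process, so the exact weighting is not recoverable from it. The sketch below shows one plausible form, assuming an equal-weight running mean over all traversals of the link; the function names and the `count` bookkeeping are hypothetical, and angle wrap-around is ignored.

```python
def odometric_change(pose_before, pose_after):
    """Change in (x, y, theta) between two odometric poses."""
    return tuple(a - b for a, b in zip(pose_after, pose_before))


def average_transition(dp_stored, dp_new, count):
    """Fold a new measurement into a stored transition.

    count is the number of traversals already summarised by dp_stored.
    Assumption: every traversal carries equal weight (running mean).
    Returns the updated transition and the updated traversal count.
    """
    updated = tuple((count * s + n) / (count + 1) for s, n in zip(dp_stored, dp_new))
    return updated, count + 1
```

For example, a link first learned as `(1.0, 0.0, 0.0)` and then re-traversed with odometry `(2.0, 1.0, 0.0)` would be stored as `(1.5, 0.5, 0.0)` under this assumption.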

Two other variables are used to store temporal and behavioural information. $dt_{ij}$ stores the time taken for the robot to transition between the two experiences.

This temporal link variable is used to form the temporal map. To create the temporal map, the current active experience (representing the robot’s current location) is seeded with a zero time stamp value, and all other experiences are assigned a ‘very large’ value. Time stamp values are then assigned to linked experiences based on the current experience’s time stamp and the temporal link information. This process is iterated, with any experience A only updating experience B if they are linked and if experience B’s time stamp is larger. The updated time stamp value, $t_j(n+1)$, is given by:

$$t'_j = t_i(n) + dt_{ij}$$

$$t_j(n+1) = \min\big(t_j(n),\ \min T_j\big)$$

Where $t_i$ is the time stamp value of experience $i$, $dt_{ij}$ is the temporal link from experience $i$ to experience $j$, $t'_j$ is the resultant proposed time stamp value of experience $j$, $T_j$ is the set of proposed time stamp values for experience $j$, and $n$ is the iteration number. The map creation process stops when the time stamp values of all the experiences reach a stable state.

By propagating time stamps from the robot’s current location and only updating experience time stamps to lower values, the temporal map creation process always produces one increasing path of experience time stamp values from the robot location to the goal. The path is guaranteed to represent the shortest time duration route to the goal, by virtue of the final map stability condition, which indicates no experience can be reached any more quickly in time than as indicated by its current time stamp value. If there are two routes to the goal that are exactly equal in temporal length, the temporal map will have two increasing time stamp paths; however, in practice, this is unlikely to occur. In this situation the robot can use either route to navigate successfully to the goal.
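The time stamp propagation described above behaves like a shortest-path relaxation over the transition graph. The following Python sketch illustrates that reading under stated assumptions: links are directed, every temporal link is strictly positive, updates are applied in place rather than in synchronous iterations, and `math.inf` stands in for the ‘very large’ initial value. The function names and the `links` dictionary layout are hypothetical.

```python
import math


def build_temporal_map(links, start):
    """Propagate time stamps outward from the current experience.

    links maps (i, j) to the transition time from experience i to j.
    Returns a dict of experience id -> time stamp; unreachable
    experiences keep the 'very large' value (math.inf).
    """
    nodes = {start}
    for i, j in links:
        nodes.update((i, j))
    stamps = {n: math.inf for n in nodes}
    stamps[start] = 0.0
    changed = True
    while changed:  # stop once every time stamp is stable
        changed = False
        for (i, j), dt in links.items():
            proposed = stamps[i] + dt
            if proposed < stamps[j]:  # only ever lower a time stamp
                stamps[j] = proposed
                changed = True
    return stamps


def route_to_goal(links, stamps, goal):
    """Recover the increasing time stamp path ending at goal, or None if unreachable."""
    if math.isinf(stamps[goal]):
        return None
    route = [goal]
    while stamps[route[-1]] > 0.0:
        cur = route[-1]
        # The predecessor is a linked experience whose stamp plus link time matches.
        prev = next(i for (i, j), dt in links.items()
                    if j == cur and stamps[i] + dt == stamps[cur])
        route.append(prev)
    return route[::-1]
```

An experience whose time stamp stays at infinity is simply not part of the temporal map, which matches the observation that the experience map need not be fully interconnected.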

The experience map is not necessarily completely interconnected, so a temporal map may not include all the experiences in it. Once the robot starts navigating to the goal it may come across and activate experiences not involved in the original temporal map. In such a situation the robot forms a new temporal map, which may not include the original route and may contain a shortest route different from the original. The route following mechanism is structured so that if the new route is longer, the robot remembers and continues to follow the old shorter route for a fixed period of time before switching to the new route. If the new route is shorter, the robot switches to it immediately and hence uses the new information to reach the goal more rapidly.

$b_{ij}$ stores the local movement behaviour that was used during the transition. The behavioural link information is used to update the experience map when the environment changes, and also to aid exploration of the environment.

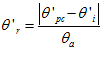

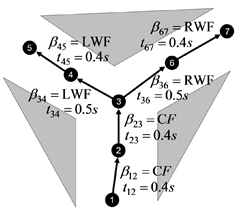

Fig. 3 shows an example of the behavioural and temporal information learned through the experience mapping algorithm. By using the algorithm, the robot is, in effect, learning routes through the environment in a robot-friendly format. From the robot’s perspective, a route is a sequence of experiences that can be achieved in order by using the appropriate movement behaviours between experiences. For example, at the fork, the route that the robot follows depends on whether it uses the left wall following (LWF) or right wall following (RWF) local movement behaviour. The robot also has a concept of how long a route typically takes to traverse, as encoded by the temporal transition information between experiences. Sections of a route where the robot is forced to turn may take longer to traverse than straight sections.

Fig. 3 An example of the behavioural and temporal information. The numbered circles represent experiences, and CF, LWF, and RWF are movement behaviours: CF – Centreline Following; LWF – Left Wall Following; RWF – Right Wall Following.

III. Map Correction

When the robot sees familiar visual scenes after spending time in a novel part of the environment, visual activity is injected into the pose cell matrix, causing the robot to re-localise its perceived pose. This causes the robot’s associated location within the experience map (given by the maximally active experience) to jump from the new experience it has most recently learned to a previously learnt experience. The experience mapping algorithm learns this new transition, which contains a large discrepancy between the transition’s relative spatial information and the difference between the two experiences’ $(x, y, \theta)$ co-ordinates in the experience map. For example, two experiences may be positioned several metres apart in the experience map but be linked by transitions encoding much smaller distances.

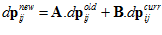

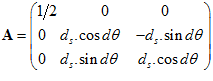

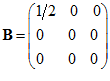

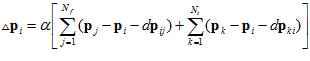

By minimising the discrepancies between relative experience poses and inter-experience spatial transition information, the experience map can become locally representative of the environment’s spatial arrangement. This can be achieved by consolidating the expected pose of each experience based on relative odometric information and their current pose. Under this correction process, the change in experience pose, $\Delta\mathbf{p}_i$, is given by:

$$\Delta\mathbf{p}_i = \alpha\left[\sum_{j=1}^{N_f}\left(\mathbf{p}_j - \mathbf{p}_i - d\mathbf{p}_{ij}\right) + \sum_{k=1}^{N_t}\left(\mathbf{p}_k - \mathbf{p}_i + d\mathbf{p}_{ki}\right)\right]$$

Where $\mathbf{p}_i$ is the pose of experience $i$ in the experience map, $d\mathbf{p}_{ij}$ is the spatial transition information stored for the link from experience $i$ to experience $j$, $\alpha$ is a learning rate constant, $N_f$ is the number of links from experience $i$ to other experiences, and $N_t$ is the number of links from other experiences to experience $i$. The application of this equation to all the experiences in the experience map is dubbed the experience map correction process.

The map correction process is subject to the same constraints as any network-style learning system: appropriate learning rates must be used to balance rapid convergence against instability. Experimentation determined that a learning rate of $\alpha = 0.5$ results in the map rapidly converging to a stable state.
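One pass of the correction process can be sketched in Python as below. It is an illustration under assumptions rather than the author's code: each link pulls its source experience towards the position implied by the link and pulls its target the opposite way, transitions are treated as already expressed in the map frame, and the names `correct_map` and `wrap`, the dictionary layout, and the default `alpha` are hypothetical.

```python
import math


def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def correct_map(poses, links, alpha=0.5):
    """Apply one correction pass to every experience pose, in place.

    poses maps experience id -> [x, y, theta] in the experience map.
    links maps (i, j) -> (dx, dy, dtheta), the stored transition i -> j.
    alpha is the learning rate (illustrative default).
    """
    delta = {i: [0.0, 0.0, 0.0] for i in poses}
    for (i, j), dp in links.items():
        # Discrepancy between the current relative pose and the stored transition.
        err = [poses[j][0] - poses[i][0] - dp[0],
               poses[j][1] - poses[i][1] - dp[1],
               wrap(poses[j][2] - poses[i][2] - dp[2])]
        for k in range(3):
            delta[i][k] += alpha * err[k]  # link leaving i: move i towards p_j - dp_ij
            delta[j][k] -= alpha * err[k]  # link entering j: move j towards p_i + dp_ij
    for i, d in delta.items():
        poses[i][0] += d[0]
        poses[i][1] += d[1]
        poses[i][2] = wrap(poses[i][2] + d[2])
    return poses
```

Repeating the pass spreads a loop closure discrepancy over all the experiences around the loop; a learning rate that is too large for highly connected experiences makes the updates overshoot.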

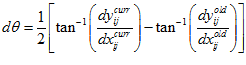

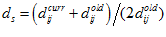

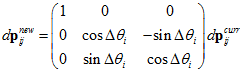

When the orientation of an experience is changed through the map correction process, the $(x, y)$ component of its transitional information must also be updated to account for the rotation. The updated transitional information, $d\mathbf{p}_{ij}^{upd}$, is given by:

$$\begin{bmatrix} dx_{ij}^{upd} \\ dy_{ij}^{upd} \end{bmatrix} = \begin{bmatrix} \cos\Delta\theta_i & -\sin\Delta\theta_i \\ \sin\Delta\theta_i & \cos\Delta\theta_i \end{bmatrix} \begin{bmatrix} dx_{ij} \\ dy_{ij} \end{bmatrix}$$

Where $dx_{ij}$ and $dy_{ij}$ are the $(x, y)$ components of the stored transition and $\Delta\theta_i$ is the change in the orientation of the experience.
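As a small worked illustration, the rotation of a stored transition can be written as a standard 2D rotation. The sketch assumes positive angles are counter-clockwise and that the angular component of the transition is left unchanged; `rotate_transition` is a hypothetical name.

```python
import math


def rotate_transition(dp, dtheta):
    """Rotate the (x, y) part of a transition by dtheta radians.

    dp is (dx, dy, dth); the angular component dth is passed through.
    """
    dx, dy, dth = dp
    c, s = math.cos(dtheta), math.sin(dtheta)
    return (c * dx - s * dy, s * dx + c * dy, dth)
```

With this convention, a transition pointing along the map's x axis points along its y axis after the source experience is rotated by a quarter turn.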

IV. Map Adaptation and Long Term Maintenance

The experience map represents changes in the environment through modification of the inter-experience transition information. As well as learning experience transitions, the system also monitors transition ‘failures’. Transition failures occur when the robot’s current experience switches to an experience other than the one expected given the robot’s current movement behaviour. If enough failures occur for a particular transition, indicated by the confidence level dropping below a certain threshold, then that link is deleted from the experience map. The confidence level, $c_{ij}$, is given by:

$$c_{ij} = \frac{A_{ij}}{A_{i b_{ij}}}$$

Where $A_{ij}$ is the number of times the transition between experience $i$ and $j$ has occurred, and $A_{i b_{ij}}$ is the number of times a transition from experience $i$ to any experience using the behaviour $b_{ij}$ (the movement behaviour stored for the link) has occurred.
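The failure monitoring can be sketched as simple counting. The Python below assumes the confidence of a link is the fraction of departures from an experience, under the link's behaviour, that actually arrived at the link's target; the class name `TransitionStats`, its methods, and the threshold value are hypothetical and not taken from the source.

```python
from collections import defaultdict


class TransitionStats:
    def __init__(self, threshold=0.3):
        self.threshold = threshold         # illustrative deletion threshold
        self.taken = defaultdict(int)      # (i, j) -> times transition i -> j occurred
        self.departures = defaultdict(int) # (i, behaviour) -> transitions leaving i under behaviour
        self.behaviour = {}                # (i, j) -> behaviour stored on the link

    def record(self, i, j, behaviour):
        """Record an observed transition from i to j under the given behaviour."""
        self.behaviour.setdefault((i, j), behaviour)  # learn the link if it is new
        self.taken[(i, j)] += 1
        self.departures[(i, behaviour)] += 1

    def confidence(self, i, j):
        """Fraction of departures from i, under the link's behaviour, that reached j."""
        total = self.departures[(i, self.behaviour[(i, j)])]
        return self.taken[(i, j)] / total if total else 1.0

    def prune(self):
        """Delete and return every link whose confidence is below the threshold."""
        dead = [link for link in self.behaviour if self.confidence(*link) < self.threshold]
        for link in dead:
            del self.behaviour[link]
        return dead
```

If a corridor is blocked so that left wall following from experience 0 now leads to experience 2 instead of experience 1, repeated traversals lower the confidence of the old link until `prune` removes it.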

In a fixed size environment it is desirable that the experience mapping algorithm limit the number of experiences in the map. The number of experiences needs to remain limited to ensure that the map correction process is computable in real-time.

In order to limit the size of the experience map, it is necessary to prune redundant experiences. The RatSLAM system in combination with the experience mapping algorithm has no global Cartesian sense of space, so it is difficult to identify redundant map representations. For instance, differentiating between redundancy and dynamic change in the environment is a very challenging task. The development of an experience map pruning technique involves a number of heuristic decisions:

- Should the system unlearn parts of the environment it can no longer access, and how can it identify such situations?

- What is the difference between a valid representation of a currently inaccessible location and a redundant representation of an existing location?

- In general, how should the system determine experience importance in order to prioritise the retention of experiences?

For the RatSLAM system and experience mapping algorithm there are two possible approaches: prune through spatial analysis of the experience map structure, or prune by weeding out one-off experiences. The second approach was taken in the initial implementation of a map maintenance module.

When the experience map reaches the allocated maximum size, the pruning algorithm starts removing experiences. The algorithm searches for experiences that have only been experienced once by the robot during the experiment, and selects the experience from this group with the oldest activation time stamp. By pruning these experiences, the robot forgets parts of the map which have never been verified by a second pass, but keeps representations that have been reinforced by multiple passes. The temporal selectivity ensures that older once-off experiences will be unlearned rather than those the robot has just learned exploring a newly discovered room. Due to battery life constraints, this pruning algorithm was never fully tested on a robot, although it was found to work well in tests of up to 2 hours duration.
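The selection rule for pruning can be sketched as follows. This is a minimal Python illustration that assumes each experience keeps a visit count and the time it was last active; the names `ExperienceRecord` and `prune_one` are hypothetical, and removing the links attached to a pruned experience is left out.

```python
from dataclasses import dataclass


@dataclass
class ExperienceRecord:
    exp_id: int
    visits: int         # how many times the robot has activated this experience
    last_active: float  # time stamp of the most recent activation


def prune_one(experiences, max_size):
    """Remove and return one experience once the map has reached max_size.

    Only experiences visited exactly once are candidates, and the one with
    the oldest activation time stamp is chosen. Returns None if the map is
    below max_size or there is no once-visited experience.
    """
    if len(experiences) < max_size:
        return None
    candidates = [e for e in experiences if e.visits == 1]
    if not candidates:
        return None
    victim = min(candidates, key=lambda e: e.last_active)
    experiences.remove(victim)
    return victim
```

Choosing the oldest candidate protects experiences the robot has only just learned, which have not yet had a chance to be revisited.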

For further information, please read Milford 2008.

