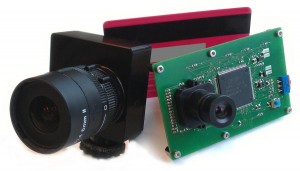

Dynamic Vision Sensor (DVS)

From inilabs.com

DVS Overview

Conventional vision sensors see the world as a series of frames. Successive frames contain enormous amounts of redundant information, wasting memory access, RAM, disk space, energy, computational power and time. In addition, each frame imposes the same exposure time on every pixel, making it difficult to deal with scenes containing very dark and very bright regions.

The Dynamic Vision Sensor (DVS) solves these problems by using patented technology that works like your own retina. Instead of wastefully sending entire images at fixed frame rates, only the local pixel-level changes caused by movement in a scene are transmitted – at the time they occur. The result is a stream of events at microsecond time resolution, equivalent to or better than conventional high-speed vision sensors running at thousands of frames per second. Power, data storage and computational requirements are also drastically reduced, and sensor dynamic range is increased by orders of magnitude due to the local processing.
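The event-generation principle described above can be illustrated with a minimal Python sketch. It assumes the idealized pixel model commonly used to describe DVS sensors: each pixel tracks the logarithm of its intensity and emits an ON or OFF event whenever that value moves by a fixed contrast threshold. The `Event` record, the `frames_to_events` function, and the threshold value of 0.2 are hypothetical illustrations, not iniLabs software or a DVS driver API. Because the sketch works from frames, it cannot reproduce the sensor's asynchronous microsecond timing; every event simply inherits its frame's timestamp.

```python
import numpy as np
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    """One DVS-style event (hypothetical record layout for this sketch)."""
    x: int         # pixel column
    y: int         # pixel row
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = ON (brighter), -1 = OFF (darker)


def frames_to_events(frames: Sequence[np.ndarray],
                     timestamps_us: Sequence[int],
                     threshold: float = 0.2,
                     eps: float = 1e-3) -> List[Event]:
    """Emulate idealized DVS pixels from a sequence of intensity frames.

    Assumed model: every pixel stores a reference log intensity and emits one
    event per `threshold` step that its current log intensity has moved away
    from that reference. Pixels whose brightness does not change emit nothing.
    """
    stack = np.asarray(frames, dtype=np.float64)
    # Per-pixel reference level; eps avoids log(0) for fully dark pixels.
    log_ref = np.log(stack[0] + eps)
    events: List[Event] = []
    for frame, t in zip(stack[1:], timestamps_us[1:]):
        diff = np.log(frame + eps) - log_ref
        # Whole contrast-threshold crossings per pixel since its last event.
        n_cross = np.floor(np.abs(diff) / threshold).astype(int)
        ys, xs = np.nonzero(n_cross)
        for y, x in zip(ys, xs):
            pol = 1 if diff[y, x] > 0 else -1
            # A real sensor timestamps each crossing asynchronously; a
            # frame-based emulation can only assign the frame's timestamp.
            events.extend(Event(int(x), int(y), int(t), pol)
                          for _ in range(n_cross[y, x]))
            # Move the reference by the amount that was reported.
            log_ref[y, x] += pol * n_cross[y, x] * threshold
    return events


if __name__ == "__main__":
    still = np.ones((2, 2))
    moved = still.copy()
    moved[0, 0] = 2.0   # one pixel gets brighter
    moved[1, 1] = 0.5   # one pixel gets darker
    evs = frames_to_events([still, moved, moved.copy()], [0, 1000, 2000])
    for e in evs:
        print(e)
```

In this example, the brighter pixel (0, 0) produces three ON events and the darker pixel (1, 1) produces three OFF events, all stamped 1000 µs. The two unchanged pixels and the repeated third frame produce no events at all, which is the redundancy saving the paragraph above describes.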

Video: Event-based, 6-DOF Pose Tracking for High-Speed Maneuvers using a Dynamic Vision Sensor

Application Areas

- Surveillance and ambient sensing

- Fast robotics: mobile (e.g. quadcopter, video from University of Zurich Robotics and Perception group), fixed (e.g. RoboGoalie, video from University of Zurich INI Sensors group)

- Factory automation

- Microscopy

- Motion analysis, e.g. human or animal motion

- Hydrodynamics

- Sleep research and chronobiology

- Fluorescent imaging

- Particle tracking

More info on the iniLabs website

About

Brain Inspired Navigation Blog

New discovery worth spreading on brain-inspired navigation in neurorobotics and neuroscience
